I’ve been using Linode for many years to host what I consider to be my most “production grade” self-hosted services, namely this blog and my mail server. My initial Linode server was built in 2011 on CentOS 6. That release is approaching end of life, so I’ve started building its replacement. Since I originally built this server, my home network has grown up and now provides a myriad of services. When starting on the new server, I thought it would be nice to be able to use these more easily from my remote servers, so I’ve begun some work to integrate the two networks more closely.

Integration Points

There are a few integration points I’m targeting here, some of which I’ve done already and others are still to be done:

- Get everything onto the same network, or at least on different subnets of my main network so I can control traffic between networks via my pfSense firewall. This gives me the major benefit of being able to access selected services on my local network from the cloud without having to make that service externally accessible. In order to do this securely you want to make sure the connection is encrypted – i.e. you want a VPN. I’m using OpenVPN.

- Use ZFS snapshots for backing up the data on the remote systems. I’d previously been using plain old rsync for copying the data down locally where it gets rolled into my main backups using restic. There is nothing wrong with this approach, but using ZFS snapshots gives more flexibility for restoring back to a certain point without having to extract the whole backup.

- Integrate into my check_mk setup for monitoring. I’m already doing this and deploying the agent via Ansible/CI. However, now the agent connection will go via the VPN tunnel (it’s SSH anyway, so this doesn’t make a huge difference).

- Deploy the configuration to everything with Ansible and Gitlab CI – I’m still working on this!

- Build a centralised logging server and send all my logs to it. This will be a big win, but sits squarely in the to-do column. However, it will benefit from the presence of the VPN connection, since the syslog protocol isn’t really suitable for running over the big-bad Internet.

Setting Up OpenVPN

I’m setting this up with the server being my local pfSense firewall and the clients being the remote cloud machines. This is somewhat the reverse to what you’d expect, since the remote machines have static IPs. My local IP is dynamic, but DuckDNS does a great job of not making this a problem.

The server setup is simplified somewhat due to using pfSense with the OpenVPN Client Export package. I’m not going to run through the full server setup here – the official documentation does a much better job. One thing worth noting is that I set this up as the second OpenVPN server running on my pfSense box. This is important as I want these clients to be on a different IP range, so that I can firewall them well. The first VPN server runs my remote access VPN which has unrestricted access, just as if I were present on my LAN.

In order to create the second server, I just had to select a different UDP port and set the IP range I wanted in the wizard. It should also be noted that the VPN configuration is set up not to route any traffic through it by default. This prevents all the traffic from the remote server trying to go via my local network.

On the client side, I’m using the standard OpenVPN package from the Ubuntu repositories:

$ sudo apt install openvpn

After that you can extract the configuration zip file from the server and test with OpenVPN in your terminal:

$ unzip <your_config>.zip

$ cd <your_config>

$ sudo openvpn --config <your_config>.ovpn

After a few seconds you should see the client connect and should be able to ping the VPN address of the remote server from your local network.

Always On VPN Connection

To make this configuration persistent we first move the files into /etc/openvpn/client, renaming the config file to give it the .conf extension:

$ sudo mv <your_config>.key /etc/openvpn/client/client.key

$ sudo mv <your_config>.p12 /etc/openvpn/client/client.p12

$ sudo mv <your_config>.ovpn /etc/openvpn/client/client.conf

You’ll want to update the pkcs12 and tls-auth lines to point to the new .p12 and .key files. I used full paths here just to make sure it would work later. I also added a route to my local network in the client config:

route 10.0.0.0 255.255.0.0

You should then be able to activate the OpenVPN client service via systemctl:

$ sudo systemctl start openvpn-client@client.service

$ sudo systemctl enable openvpn-client@client.service

If you check your system logs, you should see the connection come up again. It’ll now persist across reboots and should also reconnect if the connection goes down for any reason. So far it’s been 100% stable for me.
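The config edits described above (absolute paths for pkcs12 and tls-auth, plus the extra route) could also be scripted, which may help when rolling this out to more servers. The following is only a sketch: fix_client_conf is a hypothetical helper name, and it assumes GNU sed and that the key files are named client.p12 and client.key inside the directory you pass in.

```shell
#!/bin/bash
# Sketch: rewrite the pkcs12 and tls-auth lines of an OpenVPN client config to
# absolute paths and append a route line if it is not already present.
# fix_client_conf is a hypothetical helper, not part of OpenVPN.
fix_client_conf() {
    local conf="$1"   # path to the client .conf file
    local dir="$2"    # directory holding client.p12 and client.key (assumed names)
    local route="$3"  # network and netmask, e.g. "10.0.0.0 255.255.0.0"

    # Replace the file argument on both lines; anything after the key file on
    # the tls-auth line (such as a key direction) is kept as-is.
    sed -i -E \
        -e "s|^pkcs12[[:space:]]+.*|pkcs12 ${dir}/client.p12|" \
        -e "s|^tls-auth[[:space:]]+[^[:space:]]+(.*)$|tls-auth ${dir}/client.key\1|" \
        "$conf"

    # Append the route only once, so the function is safe to re-run.
    grep -qxF "route ${route}" "$conf" || echo "route ${route}" >> "$conf"
}

# Example (run as root):
#   fix_client_conf /etc/openvpn/client/client.conf /etc/openvpn/client "10.0.0.0 255.255.0.0"
```

Restart the openvpn-client@client service after changing the config so the new paths and route take effect.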

At this point I added a DNS entry on my pfSense box to allow me to access the remote machine via its hostname from my local network. This isn’t required, but it’s quite nice to have. The entry points to the VPN address of the machine, so all traffic will go via the tunnel.
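On the remote machine, one way to confirm that traffic bound for the local network really uses the tunnel is to look for the pushed route in the routing table. This is a rough sketch: route_via_tunnel is a hypothetical helper, and it assumes the tunnel device name starts with tun and that the network is given in the CIDR form printed by ip route (10.0.0.0/16 for the route 10.0.0.0 255.255.0.0).

```shell
#!/bin/bash
# Sketch: read routing-table text on stdin and succeed if it contains a route
# for the given network that leaves via a tun device.
# route_via_tunnel is a hypothetical helper name.
route_via_tunnel() {
    local net="$1"  # network in CIDR form, as printed by `ip route show`
    awk -v net="$net" '
        $1 == net {
            # Look for "dev tunN" anywhere after the destination field.
            for (i = 2; i < NF; i++)
                if ($i == "dev" && $(i + 1) ~ /^tun/) found = 1
        }
        END { exit found ? 0 : 1 }
    '
}

# Example:
#   ip route show | route_via_tunnel 10.0.0.0/16 && echo "LAN route uses the tunnel"
```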

Firewall Configuration

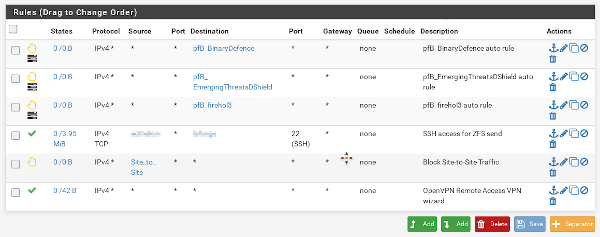

Since these servers have publicly available services running on them, I don’t want them to have unrestricted access to my local network. Therefore, I’m blocking all incoming traffic from the new VPN’s IP range in pfSense. I’ll then add specific exceptions for the services I want them to access. This is pretty much how you would set up a standard DMZ.

To do this I added an alias for the IP range in question and then added a block rule on the OpenVPN firewall tab in pfSense. This is slightly different to the way my DMZ is set up, since I don’t want to block all traffic on the OpenVPN interface, just traffic from that specific IP range (to allow my remote access VPN to continue working!).

You’ll probably also want to configure the remote server to accept traffic from the VPN so that you can access any services on the server from your local network. Do this with whatever Linux firewall tool you usually use (I use ufw).
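With ufw, the rules on the remote server might look something like the output of the sketch below. It only prints the commands so they can be reviewed before being run with sudo. vpn_allow_rules is a hypothetical helper, and both the source range (10.0.0.0/16, taken from the route in the client config, assuming LAN clients are not NATed onto the VPN range) and the choice of ports are assumptions to adapt to your own setup.

```shell
#!/bin/bash
# Sketch: print (not run) ufw commands that allow the given TCP ports from a
# source subnet. vpn_allow_rules is a hypothetical helper name.
vpn_allow_rules() {
    local subnet="$1"  # source range allowed in, e.g. 10.0.0.0/16 (assumption)
    shift
    local port
    for port in "$@"; do
        echo "ufw allow from ${subnet} to any port ${port} proto tcp"
    done
}

# Example: print rules for SSH and HTTPS, review them, then run each with sudo.
#   vpn_allow_rules 10.0.0.0/16 22 443
```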

Storing Data on ZFS

And now for something completely different….

As discussed before, I was previously backing up the data on these servers with rsync. However, I was missing the snapshotting I get on my local systems. These local systems mount their data directories via NFS to my main home server, which then takes care of the snapshot duties. I didn’t want to use NFS over the VPN connection for performance reasons, so I opted for local snapshots and ZFS replication.

In order to mount a ZFS pool on our cloud VM we need a device to store our data on. I could add some block storage to my Linodes (and I may in future), but I can also use a loopback file in ZFS (and not have to pay for extra space). To do this I just created a 15G blank file and created the zpool on top of that:

$ sudo mkdir /zpool

$ sudo dd if=/dev/zero of=/zpool/storage bs=1G count=15

$ sudo apt install zfsutils-linux

$ sudo zpool create -m /storage storage /zpool/storage

I can then go about creating my datasets (one for the mail storage and one for docker volumes):

$ sudo zfs create storage/mail

$ sudo zfs create storage/docker-data

Automating ZFS Snapshots

To automate my snapshots I’m using Sanoid. To install it (and its companion tool Syncoid) I did the following:

$ sudo apt install pv lzop mbuffer libconfig-inifiles-perl git

$ git clone https://github.com/jimsalterjrs/sanoid

$ sudo mv sanoid /opt/

$ sudo chown -R root:root /opt/sanoid

$ sudo ln /opt/sanoid/sanoid /usr/sbin/

$ sudo ln /opt/sanoid/syncoid /usr/sbin/

Basically all we do here is install a few dependencies and then download Sanoid and install it in /opt. I then hard link the sanoid and syncoid executables into /usr/sbin so that they are on the path. We then need to copy over the default configuration:

$ sudo mkdir /etc/sanoid

$ sudo cp /opt/sanoid/sanoid.conf /etc/sanoid/sanoid.conf

$ sudo cp /opt/sanoid/sanoid.defaults.conf /etc/sanoid/sanoid.defaults.conf

I then edited the sanoid.conf file for my setup. My full configuration is shown below:

[storage/mail]

use_template=production

[storage/docker-data]

use_template=production

recursive=yes

#############################

# templates below this line #

#############################

[template_production]

frequently = 0

hourly = 36

daily = 30

monthly = 12

yearly = 2

autosnap = yes

autoprune = yes

This is pretty self-explanatory. Right now I’m keeping loads of snapshots; I’ll pare this down later if I start to run out of disk space. The storage/docker-data dataset has recursive snapshots enabled because I will most likely make each Docker volume its own dataset.
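With this template, each dataset settles at up to 36 + 30 + 12 + 2 = 80 snapshots once the retention windows fill. To see how many of each kind actually exist, something like the sketch below could be used. count_autosnaps is a hypothetical helper, and it assumes Sanoid’s usual snapshot naming of autosnap_<timestamp>_<type>.

```shell
#!/bin/bash
# Sketch: count Sanoid snapshots per type (hourly, daily, ...) from a list of
# snapshot names on stdin. Assumes names of the form
#   dataset@autosnap_<date>_<time>_<type>
# count_autosnaps is a hypothetical helper name.
count_autosnaps() {
    # Split on "_" so the last field is the snapshot type; lines that are not
    # Sanoid autosnaps (e.g. manual snapshots) are ignored.
    awk -F_ '/@autosnap_/ { n[$NF]++ } END { for (t in n) print t, n[t] }' | sort
}

# Example:
#   zfs list -H -t snapshot -o name -r storage | count_autosnaps
```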

This is all capped off with a cron job in /etc/cron.d/zfs-snapshots:

* * * * * root TZ=UTC /usr/local/bin/log-output '/usr/sbin/sanoid --cron'

Since my rant a couple of weeks ago, I’ve been trying to assemble some better practices around cron jobs. The log-output script is one of these, from this excellent article.

Syncing the Snapshots Locally

The final part of the puzzle is using Sanoid’s companion tool Syncoid to sync these down to my local machine. This seems difficult to do in a secure way, due to the permissions that zfs receive needs. I tried to use delegated permissions, but it looks like the mount permission doesn’t work on Linux.

The best I could come up with was to add a new unprivileged user and allow it to only run the zfs command with sudo by adding the following via visudo:

syncoid ALL=(ALL) NOPASSWD:/sbin/zfs

I also set up an SSH key on the remote machine and added it to the syncoid user on my home server. Usually I would restrict the commands that could be run via this key for added security, but it looks like Syncoid does quite a bit, so I wasn’t sure how to go about this (if anyone has any ideas, let me know).

With that in place we can test our synchronisation:

$ sudo syncoid -r storage/mail syncoid@<MY HOME SERVER>:storage/backup/mail

$ sudo syncoid -r storage/docker-data syncoid@<MY HOME SERVER>:storage/docker/`hostname`

For this to work, make sure that the parent datasets exist on the receiving server but the destination datasets themselves do not; Syncoid likes to create them for you.
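The parent of a destination dataset is simply its name with the last path component removed, so creating it ahead of time can be scripted. This is a small sketch rather than anything Syncoid provides: parent_dataset is a hypothetical helper, and the example relies on zfs create -p, which creates any missing parents and does nothing if the dataset already exists.

```shell
#!/bin/bash
# Sketch: print the parent of a ZFS dataset name, e.g.
# storage/backup/mail -> storage/backup.
# parent_dataset is a hypothetical helper name.
parent_dataset() {
    local ds="$1"
    case "$ds" in
        */*) printf '%s\n' "${ds%/*}" ;;  # strip the last path component
        *)   return 1 ;;                  # a pool root has no parent dataset
    esac
}

# Example, run on the sending machine before the first sync:
#   ssh syncoid@<MY HOME SERVER> sudo zfs create -p "$(parent_dataset storage/backup/mail)"
```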

I then wrote a quick script to automate this which I dropped in /root/replicator.sh:

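#!/bin/bash

# Replicate the local ZFS snapshots to the home server with Syncoid.

# Named to avoid USER and HOSTNAME, which bash already sets itself.

REMOTE_USER=syncoid

REMOTE_HOST="<MY HOME SERVER>"

LOCAL_NAME=$(hostname)

/usr/sbin/syncoid -r storage/mail "$REMOTE_USER@$REMOTE_HOST:storage/backup/mail" 2>&1

/usr/sbin/syncoid -r storage/docker-data "$REMOTE_USER@$REMOTE_HOST:storage/docker/$LOCAL_NAME" 2>&1

Then another cron job in /etc/cron.d/zfs-snapshots finishes the job: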

56 * * * * root /usr/local/bin/log-output '/root/replicator.sh'

Conclusion

Phew! There was quite a bit there. Thanks for sticking with me if you made it this far!

With this setup I’ve come a pretty long way towards my goal of better integrating my remote servers. So far I’ve only deployed this to a single server (which will become the new mailserver). There are a couple of others to go, so the next step will be to automate as much as possible of this via Ansible roles.

I hope you’ve enjoyed this journey with me. I’m interested to hear how others are integrating remote and local networks together. Let me know if you have anything to add via the feedback channels.
