In my recent post on synchronising ZFS snapshots from remote servers, I mentioned that I had been using rsync for the same purpose. This is part of my larger overall backup strategy with restic. It was brought to my attention recently that I hadn’t actually written up my backup approach. This post will rectify that!

The key requirement of my system was to have something that would work across multiple systems, without being too difficult to maintain. I also wanted it to scale to new systems easily as my self-hosting infrastructure inevitably continues to grow. Of course, I had the usual requirements of local and off-site backups, with the off-site copy suitably secured. Restic fits the bill quite nicely for secure local and remote backups, but has no way to synchronise multiple systems unless you set it up on each system individually.

Backup Architecture

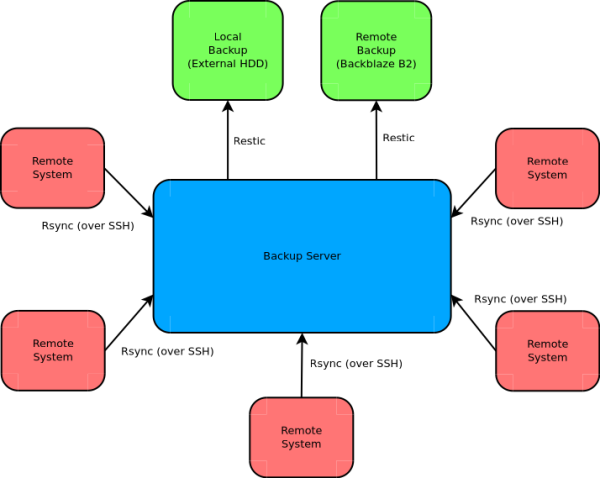

I’ve architected my backups as a centralised system, where the relevant data from each satellite system is propagated to a central server and then backed up to various end points from there. This architecture was chosen because it was reasonably easy for me to set up and maintain, and it actually results in more copies of the data, since everything has to be copied to the backup server first.

As you can see from the diagram, the synchronisation from the remote systems to the backup server is done via rsync. This is done in a pull fashion: the backup server connects to each machine in turn and pulls down the files to be backed up to its local cache.

The second stage is a backup using restic to both a locally connected external hard drive and to the cloud (in this case Backblaze B2). I’ll cover each of these steps in the following sections.

Synchronising Remote Machines with Rsync

The first step is to synchronise the relevant files on the remote machines via rsync. When I say remote machines here, I specifically mean machines which are not the central backup server. These could be remote cloud machines, hosts on the local network or VMs hosted on the same machine. In my case it’s all three, since I run the backups on my main home server.

For each machine I want to synchronise, I have a script looking like this:

#!/bin/bash

HOST=<REMOTE HOSTNAME>

PORT=22

USER=backup

SSH_KEY="/storage/data/backup/keys/backup_key"

BASE_DEST=/storage/data/backup/$HOST

LOG_DIR=/storage/data/backup/logs

LOG_FILE=$LOG_DIR/rsync-$HOST.log

function do_rsync() {

echo "Starting rsync job for $HOST:$1 at $(date '+%Y-%m-%d %H:%M:%S')..." >> $LOG_FILE 2>&1

echo >> $LOG_FILE 2>&1

mkdir -p $BASE_DEST$1 >> $LOG_FILE 2>&1

/usr/bin/rsync -avP --delete -e "ssh -p $PORT -i $SSH_KEY" $USER@$HOST:$1 $BASE_DEST$1 >> $LOG_FILE 2>&1

echo >> $LOG_FILE 2>&1

echo "Job finished at $(date '+%Y-%m-%d %H:%M:%S')." >> $LOG_FILE 2>&1

}

mkdir -p $LOG_DIR

do_rsync <DIRECTORY 1>

do_rsync <DIRECTORY 2>

...

Here we start with some basic configuration, including the hostname, port, user and SSH key to use to connect to the remote host. I then configure the local destination directory, which is located on my main ZFS mirror. I also configure where the logs will be stored.

We then get into the main function of the script, called do_rsync. This sets up the logging environment and does the actual rsync transfer with the options we’ve specified. It takes as an argument the remote directory to back up (which obviously must be readable by the user in question).

We then close out the script by ensuring the log directory exists and then calling the do_rsync function for the directories we are interested in. Looking at the backup scripts now, it would actually be good to factor out the common functionality into a helper script. This could then be sourced by all of the host-specific scripts. I also need to move this into git, which will happen with my continued migration to Ansible.
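As a rough sketch of that refactoring, the shared pieces could live in one file that each host-specific script sources. The file name `backup-common.sh`, the variables `BACKUP_USER`, `BACKUP_ROOT` and `RSYNC`, and the use of overridable defaults are assumptions made for illustration (renaming `USER` simply avoids clobbering the shell's own variable); the rsync options are the ones from the script above.

```shell
#!/bin/bash
# backup-common.sh (hypothetical name): shared helper meant to be sourced by
# each per-host script. The caller sets HOST and may override these defaults.

: "${PORT:=22}"
: "${BACKUP_USER:=backup}"
: "${SSH_KEY:=/storage/data/backup/keys/backup_key}"
: "${BACKUP_ROOT:=/storage/data/backup}"
: "${RSYNC:=/usr/bin/rsync}"   # overridable, e.g. RSYNC=echo for a dry run

# Pull one remote directory into the local cache and log the transfer.
do_rsync() {
    local src="$1"
    local dest="$BACKUP_ROOT/$HOST$src"
    local log_dir="$BACKUP_ROOT/logs"
    local log_file="$log_dir/rsync-$HOST.log"

    mkdir -p "$log_dir" "$dest"
    {
        echo "Starting rsync job for $HOST:$src at $(date '+%Y-%m-%d %H:%M:%S')..."
        echo
        "$RSYNC" -avP --delete -e "ssh -p $PORT -i $SSH_KEY" \
            "$BACKUP_USER@$HOST:$src" "$dest"
        echo
        echo "Job finished at $(date '+%Y-%m-%d %H:%M:%S')."
    } >> "$log_file" 2>&1
}
```

A host-specific script would then shrink to setting `HOST`, sourcing the helper and calling `do_rsync` once per directory. Setting `RSYNC=echo` writes the command that would be run to the log without transferring anything.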

A Note About Security

Obviously, with the rsync client logging in to the remote system automatically via SSH, it’s good to restrict what that login can do. To this end, the SSH key is locked down so that the only command that can be run is the one executed by the rsync client. This is done via the ~/.ssh/authorized_keys file:

command="/usr/bin/rsync --server --sender -vlogDtpre.is . ${SSH_ORIGINAL_COMMAND//* \//\/}",no-pty,no-agent-forwarding,no-port-forwarding,no-X11-forwarding ssh-rsa .....

These backup scripts are run from cron and spread throughout the day so as not to overlap with each other, in an effort to even out the traffic on the network. I’m not really happy with this part of the solution; it might just be better to run the whole lot in sequence.
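To see what the forced command in the `authorized_keys` entry does with the client's request, the parameter expansion can be tried on its own. sshd places the command the client asked for in `SSH_ORIGINAL_COMMAND`; the expansion discards everything up to the last space followed by a slash, so only the requested path survives and the rsync options are always the fixed ones in the entry. The sample request string here is an assumption (the exact flags an rsync client sends vary between versions), and the `${var//pattern/replacement}` syntax is a bash feature, so this presumably relies on the backup user's login shell being bash.

```shell
#!/bin/bash
# Illustrative value only: roughly what an rsync client might request when
# pulling /etc/ from the remote host.
SSH_ORIGINAL_COMMAND='rsync --server --sender -vlogDtpre.iLsfxC . /etc/'

# Same expansion as in the authorized_keys entry: replace everything up to
# the last " /" with "/", leaving only the requested path.
requested_path=${SSH_ORIGINAL_COMMAND//* \//\/}

echo "$requested_path"   # prints /etc/
```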

Local Restic Backups

The next step in the process is to run the backup to the locally connected external drive with restic. This backup is run over all the previously synced data as well as data from the local machine, such as the contents of my Nextcloud server and media collection.

This backup is achieved with the following script:

#!/bin/bash

set -e

######## START CONFIG ########

DISK_UUID="<DISK UUID>"

#GLOBAL_FLAGS="-q"

MOUNT_POINT=/mnt/backup

export RESTIC_REPOSITORY=$MOUNT_POINT/restic/storage

export RESTIC_PASSWORD_FILE=/root/restic-local.pass

######## END CONFIG ########

echo "Starting backup process at $(date '+%Y-%m-%d %H:%M:%S')."

# check for the backup disk and mount it

if [ ! -e /dev/disk/by-uuid/$DISK_UUID ]; then

echo "Backup disk not found!" >&2

exit 1

fi

echo "Mounting backup disk..."

mount -t ext4 /dev/disk/by-uuid/$DISK_UUID $MOUNT_POINT

# pre-backup check

echo "Performing pre-backup check..."

restic $GLOBAL_FLAGS check

# perform backups

echo "Performing backups..."

restic $GLOBAL_FLAGS backup /storage/data/nextcloud

restic $GLOBAL_FLAGS backup /storage/data/backup

restic $GLOBAL_FLAGS backup /storage/music

restic $GLOBAL_FLAGS backup /storage/media

# add any other directories here...

# post-backup check

echo "Performing post backup check..."

restic $GLOBAL_FLAGS check

# clean up old snapshots

echo "Cleaning up old snapshots..."

restic $GLOBAL_FLAGS forget -d 7 -w 4 -m 6 -y 2 --prune

# final check

echo "Performing final backup check..."

restic $GLOBAL_FLAGS check

# unmount backup disk

echo "Unmounting backup disk..."

umount $MOUNT_POINT

echo "Backups completed at $(date '+%Y-%m-%d %H:%M:%S')."

exit 0

This script is pretty simple, despite the wall of commands. First we have some configuration in which I specify the UUID of the external disk and the mount point of the disk. This is done because the disk is kept unmounted when not in use. The path to the restic repository (which lives under the mount point) and the path to the password file are also specified.

We then move into checking for and mounting the external disk. The first restic command performs a check on the repository to make sure all is well, before getting into the backups for the directories we are interested in. This is followed by another check to make sure that went OK.
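One side effect of `set -e` is worth keeping in mind: if a check or backup fails, the script exits before it reaches `umount`, so the disk stays mounted and the next run's `mount` will most likely fail as well. A possible way around this is an `EXIT` trap that unmounts however the script ends. In this sketch, the function name `run_with_unmount` and the overridable `UMOUNT` variable are hypothetical, added only so the pattern can be tried without root or a real disk.

```shell
#!/bin/bash
# run_with_unmount (hypothetical helper): run a command in a subshell and
# unmount the given mount point afterwards, whether the command succeeded
# or not. UMOUNT can be overridden (e.g. UMOUNT=echo) for experimenting.
run_with_unmount() {
    local mount_point="$1"; shift
    (
        set -e
        # The trap fires when the subshell exits, including exits caused
        # by set -e after a failing command.
        trap '"${UMOUNT:-umount}" "$mount_point"' EXIT
        "$@"
    )
}

# Example (not executed here):
#   run_with_unmount /mnt/backup restic check
```

In the script itself the same effect could be had more directly by adding `trap 'umount "$MOUNT_POINT"' EXIT` right after the `mount` line and dropping the explicit `umount` at the end.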

I then run a restic forget command to prune old snapshots. Currently I’m keeping the last 7 days of backups, 4 weekly backups, 6 monthly backups and 2 yearly backups! I run a final restic check before unmounting the external disk.
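Before letting a retention policy delete anything, it can be previewed. This sketch spells out the short flags from the script in their long form and swaps `--prune` for `--dry-run`, which makes restic report what it would remove without changing the repository. The function name `preview_forget` is hypothetical, and it assumes `RESTIC_REPOSITORY` and `RESTIC_PASSWORD_FILE` are exported as in the script above.

```shell
#!/bin/bash
# preview_forget (hypothetical name): show which snapshots the retention
# policy would drop, without deleting anything. Nothing runs until the
# function is called.
preview_forget() {
    restic forget --dry-run \
        --keep-daily 7 \
        --keep-weekly 4 \
        --keep-monthly 6 \
        --keep-yearly 2
}
```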

This script is run once a day from cron. I use the following command to reduce the priority of the backup script and avoid interfering with the normal operation of the server:

/usr/bin/nice -n 19 /usr/bin/ionice -c2 -n7 /storage/data/backup/restic-local.sh >> /storage/data/backup/logs/restic-local.log

Remote Restic Backups

The final stage in this process is a separate backup to the cloud. As mentioned before, I use Backblaze’s B2 service for this, since it seems to be about the cheapest around. I’ve been reasonably happy with it so far at least. The script for this stage is as follows:

#!/bin/bash

set -e

######## START CONFIG ########

B2_CONFIG=/root/b2_creds.sh

#GLOBAL_FLAGS="-q"

export RESTIC_REPOSITORY="<MY B2 REPO>"

export RESTIC_PASSWORD_FILE=/root/restic-remote-b2.pass

######## END CONFIG ########

echo "Starting backup process at $(date '+%Y-%m-%d %H:%M:%S')."

# load b2 config

source $B2_CONFIG

# pre-backup check

echo "Performing pre-backup check..."

restic $GLOBAL_FLAGS check

# perform backups

echo "Performing backups..."

restic $GLOBAL_FLAGS backup /storage/data/nextcloud

restic $GLOBAL_FLAGS backup /storage/data/backup

restic $GLOBAL_FLAGS backup /storage/music

# This costs too much to back up, but it's not the end of the world

# if I lose a load of DVD rips!

#restic $GLOBAL_FLAGS backup /storage/media

# post-backup check

echo "Performing post backup check..."

restic $GLOBAL_FLAGS check

# clean up old snapshots

echo "Cleaning up old snapshots..."

restic $GLOBAL_FLAGS forget -w 8 -m 12 -y 2 --prune

# final check

echo "Performing final backup check..."

restic $GLOBAL_FLAGS check

echo "Backups completed at $(date '+%Y-%m-%d %H:%M:%S')."

exit 0

This looks very similar to the previous script, but differs in the configuration. First I specify the file where I keep my B2 credentials, to be sourced later. This file is of the form:

export B2_ACCOUNT_ID="<MY ACCOUNT ID>"

export B2_ACCOUNT_KEY="<MY ACCOUNT KEY>"

I then set the RESTIC_REPOSITORY and RESTIC_PASSWORD_FILE variables as before. In this case the repository is of the form b2:bucketname:path/to/repo.

The snapshot retention policy here is different, with 8 weekly backups, 12 monthly backups and 2 yearly backups retained. This is mostly because I only run this backup once per week – the backup script will actually take more than 24 hours to run with all the checking and forgetting thrown in! The script is run from cron with the same nice/ionice combination as the local backup.
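A run that takes more than 24 hours is bound to coincide with the daily local backup, and a stalled run could in principle still be going when cron starts the next one. If that ever becomes a problem, one option is to guard the scripts with a lock file. This sketch uses `flock` from util-linux (assumed to be installed); `run_locked` and the lock file path in the example are hypothetical names.

```shell
#!/bin/bash
# run_locked (hypothetical helper): run a command only if nobody else holds
# the given lock file; otherwise skip this run and return non-zero.
run_locked() {
    local lock_file="$1"; shift
    (
        # fd 9 is opened on the lock file by the redirection below
        if ! flock -n 9; then
            echo "Lock $lock_file is held by another run, skipping." >&2
            exit 1
        fi
        "$@"
    ) 9>"$lock_file"
}

# Example (not executed here):
#   run_locked /storage/data/backup/restic.lock /storage/data/backup/restic-local.sh
```

Giving each script its own lock file only prevents that script from running twice at once; pointing both at the same lock file would instead make a new run skip whenever any backup is still in progress.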

Conclusion

With all that in place, I have a pretty comprehensive backup system. The system stores at least 3 copies of any data (live, local backup, remote backup) and in the case of remote systems 4 copies (live, backup server cache, local backup, remote backup). The main issue I have with this setup currently is the use of the local external disk, which I don’t like being connected to the same server. Hopefully I’ll be moving this to another machine in my next round of server upgrades.

I also don’t really like the reliance on the cloud, even though I’ve got no complaints about the B2 service. My ideal system would probably be an SBC-based system located at the home of someone with a fast internet connection and no data cap. Ideally this person would also live on a different continent! I could then run a Minio server in place of the B2 service. This would probably end up cheaper in the long run, since I’m paying nearly $10/month for the current service.

One last piece of sage advice: TEST YOUR BACKUPS! They are worth nothing if you don’t know they are working. I’ve done a couple of test restores with this system, but I’m probably due for another one.
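A test restore does not need to be elaborate. As a minimal sketch (the function name `test_restore` is hypothetical, and `RESTIC_REPOSITORY` and `RESTIC_PASSWORD_FILE` are assumed to be exported as in the scripts above), one of the backed-up directories can be restored from its most recent snapshot into a scratch directory and compared with the live copy:

```shell
#!/bin/bash
# test_restore (hypothetical name): restore the latest snapshot of one
# backed-up directory into a scratch directory and diff it against the
# live data. Returns non-zero if the restore fails or the trees differ.
test_restore() {
    local live_dir="$1"
    local scratch status=0
    scratch="$(mktemp -d)" || return 1

    # --path limits "latest" to snapshots that contain this directory
    restic restore latest --path "$live_dir" --target "$scratch" || status=$?

    # restic recreates the full original path underneath the target
    if [ "$status" -eq 0 ]; then
        diff -r "$live_dir" "$scratch$live_dir" || status=$?
    fi

    rm -rf "$scratch"
    return "$status"
}

# Example (not executed here):
#   test_restore /storage/music
```

Files that changed since the snapshot was taken will show up as differences, so this is best pointed at something that rarely changes.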

What’s your backup routine like? Got improvements for my system? Feel free to share them in the comments.
