This post is part of a series on this project.

While migrating most of the services I run to Docker containers, I’ve tried to steer clear of building my own images. Thanks to the popularity of Docker this has been pretty easy, since many projects now ship an official Docker image as part of their releases. However, the inevitable happened in my recent TT-RSS migration, when I had to build my own image.

The reason for my reluctance to build my own images is not some unfounded fear of Dockerfiles. It’s not building the images that I’m worried about, it’s maintaining them over time.

Every Docker image contains a mini Linux distribution, frozen in time from the day the image was built. Like any distribution of Linux these contain many software packages, some of which are going to need an update sooner or later in order to maintain their security. Of course you could just run the equivalent of an apt upgrade inside each container, but this defeats some of the immutability and portability guarantees which Docker provides.

The solution to this is basically no more sophisticated than ‘rebuild your images and re-deploy’. The problem is that no further help is offered. How do you go about doing that in a consistent and automated way?

Enter GitLab CI

If you have been following this blog you’ll know I’m a big fan of GitLab CI for these kinds of jobs. Luckily, GitLab already has support for building Docker images. The first thing I had to do was get this set up on my self-hosted GitLab runner. This was complicated by the fact that my runner was already running as a Docker container. It’s basically trying to start a Docker-in-Docker (DinD) container on the host, from inside a Docker container, which is a little tricky. If you want to go further down, Docker itself is running from a Snap on a virtual machine! It really is turtles all the way down.

After lots of messing around and tweaking, I finally came up with a runner configuration which allowed the DinD service to start and the Docker client in the CI build to see it:

    concurrent = 4
    check_interval = 0

    [session_server]
      session_timeout = 1800

    [[runners]]
      name = "MyRunner"
      url = "https://gitlab.com/"
      token = "insert your token"
      executor = "docker"
      [runners.custom_build_dir]
      [runners.docker]
        tls_verify = false
        image = "ubuntu:18.04"
        privileged = true
        disable_entrypoint_overwrite = false
        oom_kill_disable = false
        disable_cache = false
        environment = ["DOCKER_DRIVER=overlay2", "DOCKER_TLS_CERTDIR=/certs"]
        volumes = ["/certs/client", "/cache"]
        shm_size = 0
        dns = ["my internal DNS server"]
      [runners.cache]
        [runners.cache.s3]
        [runners.cache.gcs]
      [runners.custom]
        run_exec = ""

The important parts here seem to be that the privileged flag must be true in order to allow the starting of privileged containers and that the DOCKER_TLS_CERTDIR should be set to a known location. The client subdirectory of this should then be shared as a volume in order to allow clients to connect via TLS.

You don’t need to set the DOCKER_HOST environment variable yourself (unless you are using Kubernetes, maybe?). It should be populated with the correct value automatically. If you set it manually you will most likely get it wrong and the Docker client won’t be able to connect.
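If the Docker client in a job still cannot reach the daemon, one thing worth checking is whether the shared client certificates are actually visible inside the build container. The following is a minimal sketch of such a check, not something taken from the pipeline itself. The function name `check_client_certs` is hypothetical, and the default directory assumes `DOCKER_TLS_CERTDIR=/certs` as in the runner configuration above. The file names `ca.pem`, `cert.pem` and `key.pem` are the ones the Docker client looks for in its certificate directory.

```shell
#!/bin/sh
# Report whether the Docker TLS client files are present in a directory.
# Default directory: $DOCKER_TLS_CERTDIR/client, falling back to
# /certs/client (an assumption based on the runner configuration).
check_client_certs() {
    dir="${1:-${DOCKER_TLS_CERTDIR:-/certs}/client}"
    missing=0
    for f in ca.pem cert.pem key.pem; do
        if [ ! -f "$dir/$f" ]; then
            # Name each missing file on stderr to make the job log useful.
            echo "missing: $dir/$f" >&2
            missing=1
        fi
    done
    # Returns 0 when all three files exist, 1 otherwise.
    return "$missing"
}
```

Calling `check_client_certs` before `docker info` in a `before_script` would make a missing volume mount show up as a clear message rather than a connection error.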

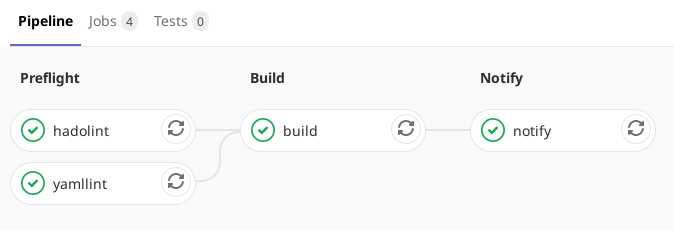

The CI Pipeline

I started the pipeline with a couple of static analysis jobs. First up is my old favourite yamllint. The configuration for this is pretty much the same as previously described. Here it’s only really checking the .gitlab-ci.yml file itself.

Next up is a new one for me. hadolint is a linting tool for Dockerfiles. It seems to make sensible suggestions, though I disabled a couple of them due to personal preference or technical issues. Conveniently, there is a pre-built Docker image, which makes configuring this pretty simple:

    hadolint:
      <<: *preflight
      image: hadolint/hadolint
      before_script:
        - hadolint --version
      script:
        - hadolint Dockerfile

Building the Image

Next comes the actual meat of the pipeline, building the image and pushing it to our container registry:

    variables:
      IMAGE_TAG: registry.gitlab.com/robconnolly/docker-ttrss:latest

    build:
      stage: build
      image: docker:latest
      services:
        - docker:dind
      before_script:
        - docker info
      script:
        - docker login -u gitlab-ci-token -p $CI_BUILD_TOKEN registry.gitlab.com
        - docker build -t $IMAGE_TAG .
        - docker push $IMAGE_TAG
      tags:
        - docker

This is relatively straightforward if you are already familiar with the Docker build process. There are a couple of GitLab-specific parts such as starting the docker:dind service, so that we have a usable Docker daemon in the build environment. The login to the GitLab registry uses the built-in $CI_BUILD_TOKEN variable as the password and from there we just build and push using the $IMAGE_TAG variable to name the image. Simple!
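The login, build and push steps can also be wrapped in a small shell function, which makes it easier to dry-run them outside CI. This is a sketch under a few assumptions rather than the pipeline's actual script. `DOCKER_BIN` and `build_and_push` are hypothetical names. `CI_JOB_TOKEN` is the name newer GitLab releases use for the job token (the pipeline above uses the older `CI_BUILD_TOKEN`), and `CI_REGISTRY` is GitLab's predefined registry address variable. The token is passed on stdin with `--password-stdin` so that it does not appear on the command line.

```shell
#!/bin/sh
# Hypothetical override: set DOCKER_BIN=echo to print the docker
# arguments instead of running them.
DOCKER_BIN="${DOCKER_BIN:-docker}"

# Log in to the registry, then build and push the given image tag.
build_and_push() {
    image_tag="$1"
    # Fall back to gitlab.com's registry when CI_REGISTRY is not set.
    registry="${CI_REGISTRY:-registry.gitlab.com}"
    # Each step only runs if the previous one succeeded.
    printf '%s' "$CI_JOB_TOKEN" |
        "$DOCKER_BIN" login -u gitlab-ci-token --password-stdin "$registry" &&
        "$DOCKER_BIN" build -t "$image_tag" . &&
        "$DOCKER_BIN" push "$image_tag"
}
```

In a job this would be called as `build_and_push "$IMAGE_TAG"`. Running it with `DOCKER_BIN=echo` prints the three docker invocations without contacting any registry.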

I also added another pipeline stage to send me a notification via my Gotify server when the build is done. This pretty much just follows the Gotify docs to send a message via curl:

    notify:
      stage: notify
      image: alpine:latest
      before_script:
        - apk add curl
      script:
        - |
          curl -X POST "https://$GOTIFY_HOST/message?token=$GOTIFY_TOKEN" \
            -F "title=CI Build Complete" \
            -F "message=Scheduled CI build for TT-RSS is complete."
      tags:
        - docker

Of course the $GOTIFY_HOST and $GOTIFY_TOKEN variables are defined as secrets in the project configuration.
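One possible refinement, sketched here as an assumption rather than as part of the real pipeline, is to wrap the curl call in a function that fails the job when Gotify rejects the request. `notify_gotify` and `CURL_BIN` are hypothetical names. The `priority` form field is an optional part of Gotify's message API, and the default of 5 is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical override: set CURL_BIN=echo to print the curl
# arguments instead of sending a request.
CURL_BIN="${CURL_BIN:-curl}"

# Send a Gotify message. Expects GOTIFY_HOST and GOTIFY_TOKEN in the
# environment, as in the notify job.
notify_gotify() {
    title="$1"
    message="$2"
    priority="${3:-5}"
    # --fail makes curl exit non-zero on HTTP errors, so a bad token or
    # host name fails the job instead of passing silently.
    "$CURL_BIN" --fail --silent --show-error -X POST \
        "https://$GOTIFY_HOST/message?token=$GOTIFY_TOKEN" \
        -F "title=$title" \
        -F "message=$message" \
        -F "priority=$priority"
}
```

The job's script line would then become something like `notify_gotify "CI Build Complete" "Scheduled CI build for TT-RSS is complete."`.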

You can find the full .gitlab-ci.yml file for this pipeline in the docker-ttrss project repository.

Scheduling Periodic Builds

GitLab actually has support for scheduled jobs built in. However, if you are running on gitlab.com as I am you will find that you have very little control over when your jobs actually run, since it runs all cron jobs at 19 minutes past the hour. This would be fine for this single job, but I’m planning on having more scheduled builds run in the future, so I’d like to distribute them in time a little better, in order to reduce the load on my runner.

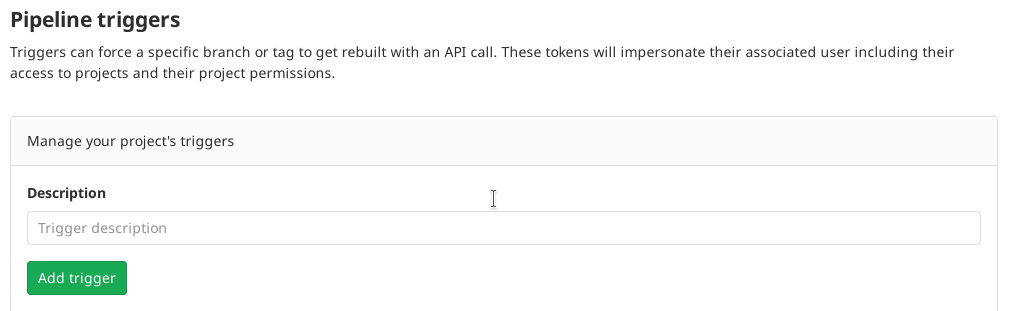

In order to do this I’m using a pipeline trigger, kicked off by a cron job at my end:

    # Run TT-RSS build on Tuesday and Friday mornings
    16 10 * * 2,5 /usr/bin/curl -s -X POST -F token=SECRET_TOKEN -F ref=master https://gitlab.com/api/v4/projects/2707270/trigger/pipeline > /dev/null

This will start my pipeline at 10:16am (NZ time) on Tuesdays and Fridays. If the build is successful there will be a new image published shortly after these times.
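As more scheduled builds are added, the trigger call could be moved into a small script so that the token lives in the environment rather than in the crontab line. The sketch below is one way to do that, under stated assumptions: `trigger_pipeline`, `GITLAB_TRIGGER_TOKEN` and `CURL_BIN` are hypothetical names, while the endpoint and the `token` and `ref` form fields are the same ones used in the cron entry.

```shell
#!/bin/sh
# Hypothetical override: set CURL_BIN=echo to print the curl
# arguments instead of sending a request.
CURL_BIN="${CURL_BIN:-curl}"

# Trigger a pipeline for a project ID on the given ref (default: master).
trigger_pipeline() {
    project_id="$1"
    ref="${2:-master}"
    # Refuse to run without a token rather than sending an empty one.
    if [ -z "${GITLAB_TRIGGER_TOKEN:-}" ]; then
        echo "GITLAB_TRIGGER_TOKEN is not set" >&2
        return 2
    fi
    # --fail turns HTTP errors into a non-zero exit status, which cron
    # can then report.
    "$CURL_BIN" --fail --silent --show-error -X POST \
        -F "token=$GITLAB_TRIGGER_TOKEN" \
        -F "ref=$ref" \
        "https://gitlab.com/api/v4/projects/$project_id/trigger/pipeline"
}
```

A cron entry would then call `trigger_pipeline 2707270 > /dev/null`, with the token exported from a file that only the cron user can read.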

An Aside: Managing random cron jobs like these really sucks once you have more than one or two machines. More than once I’ve lost a job because I forgot which machine I put it on! I know Ansible can manage cron jobs, but you are still going to end up with them configured across multiple files. I also haven’t found any way of managing cron jobs which relate to a container deployment (e.g. running WordPress cron for a containerised instance). Currently I’m managing all this manually, but I can’t help feeling that there should be a better way. Please suggest any approaches you may have in the comments!

Updating Deployed Containers

I could extend the pipeline above to update the container on my server with the new image. However, this would leave the other containers on the server without automated updates. Therefore I’m using Watchtower to periodically check for updates and upgrade any out of date images. To do this I added the following to the docker-compose.yml file on that server:

    watchtower:
      image: containrrr/watchtower
      environment:
        WATCHTOWER_SCHEDULE: 0 26,56 10,22 * * *
        WATCHTOWER_CLEANUP: "true"
        WATCHTOWER_NOTIFICATIONS: gotify
        WATCHTOWER_NOTIFICATION_GOTIFY_URL: ${WATCHTOWER_NOTIFICATION_GOTIFY_URL}
        WATCHTOWER_NOTIFICATION_GOTIFY_TOKEN: ${WATCHTOWER_NOTIFICATION_GOTIFY_TOKEN}
        TZ: Pacific/Auckland
      volumes:
        - /var/run/docker.sock:/var/run/docker.sock
      restart: always

Here I’m just checking for container updates four times a day. However, these are divided into two pairs of sequential checks separated by 30 minutes. This is more than sufficient for this server since it’s only accessible from my local networks. One of the update times is scheduled to start ten minutes after my CI build to pick up the new TT-RSS container. The reason for the second check after 30 mins is to ensure that the container gets updated even if the CI pipeline is delayed (as sometimes happens on gitlab.com).
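The Watchtower schedule has six fields rather than cron's usual five, because the first field is seconds. To make the four check times explicit, here is a small sketch that expands the minute and hour lists into clock times. `expand_schedule` is a hypothetical helper and only handles comma-separated lists of plain numbers, which is all this particular expression uses.

```shell
#!/bin/sh
# Expand the minute and hour lists of a six-field cron expression
# (seconds minutes hours day-of-month month day-of-week) into H:M times.
# Ranges, steps and "*" in the minute or hour fields are not handled.
expand_schedule() {
    # Split the expression into fields; globbing is switched off so the
    # "*" fields are not expanded into file names.
    set -f
    set -- $1
    set +f
    minutes="$2"
    hours="$3"
    for h in $(echo "$hours" | tr ',' ' '); do
        for m in $(echo "$minutes" | tr ',' ' '); do
            printf '%s:%s\n' "$h" "$m"
        done
    done
}

expand_schedule "0 26,56 10,22 * * *"
# prints:
# 10:26
# 10:56
# 22:26
# 22:56
```

The 10:26 check is the one that falls ten minutes after the 10:16 pipeline trigger, and 10:56 is the follow-up half an hour later.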

You’ll note that I’m also sending notifications from Watchtower via Gotify. I was pleasantly surprised to discover the Gotify support in Watchtower when I deployed this. However, I think the notifications will get old pretty quickly and I’ll probably disable them eventually.

Conclusion

With all this in place I no longer have to worry about keeping my custom Docker images up to date. They are rebuilt at regular intervals and updated automatically on the server. It actually feels pretty magical watching this all work end-to-end since there are a lot of moving parts here.

I’ll be deploying this approach as I build more custom images. I also still have to deploy Watchtower on all my other Docker hosts. The good news is that this has cured my reluctance to build custom images, so there will hopefully be more to follow. In the meantime, the community can benefit from a well maintained and updated TT-RSS image. Just pull with the following command:

    docker pull registry.gitlab.com/robconnolly/docker-ttrss:latest

Enjoy!
